
Here's How To Install Your Own Uncensored Local GPT-Like Chatbot

Key Takeaways

Artificial intelligence is a great tool for many people, but the restrictions on free online models make it difficult to use in some contexts. Here's an easy way to install a censorship-free, GPT-like chatbot on your local machine.

Why I Opted For a Local GPT-Like Bot

I've been using ChatGPT for a while, and I've even coded an entire game with it. For that project, however, I used the online-only GPT engine and found its responses a little limited. While looking for a solution for future projects, I came across GPT4All, a GitHub project with code to run LLMs privately on your home machine. I decided to install it for a few reasons, primarily:

- My data remains private, so I don't have to worry about OpenAI collecting any of the data I use within the model.
- Responses aren't filtered through OpenAI's censorship guidelines.
- I can use the local LLM with personal documents to get more tailored responses based on how I write and think.
- If I'm disconnected, I still have an LLM on my local machine that I can use for whatever I need.

If you're like me and want a local version of your favorite LLM that doesn't censor itself, here's a breakdown of how to do it.

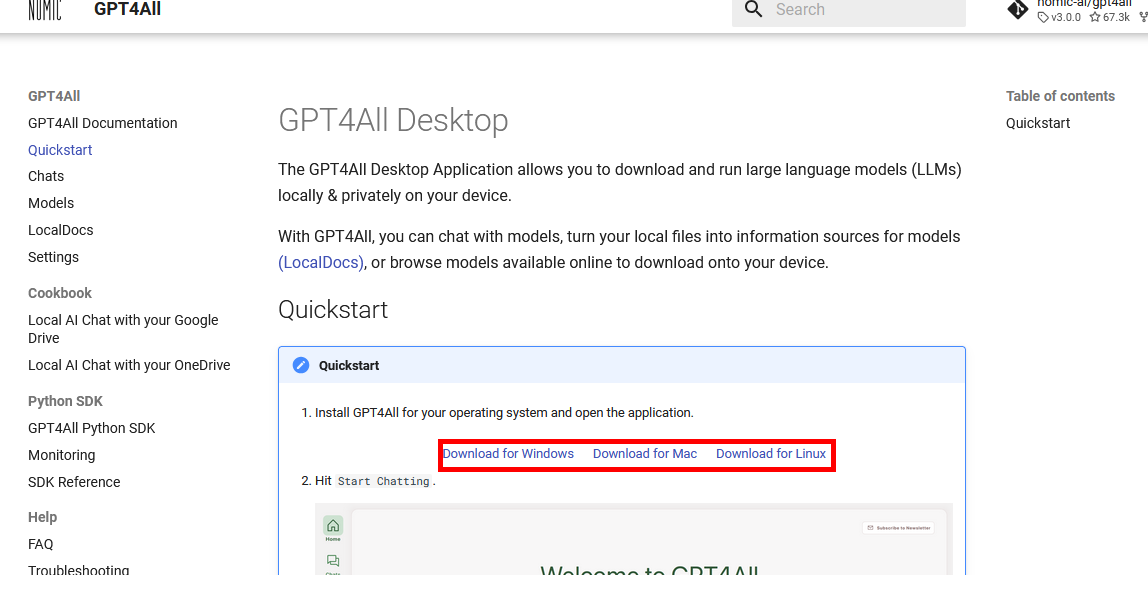

Getting started is as simple as downloading the package from the GPT4All quick start site, and installation is a breeze. Just be aware that you'll need around 1GB of storage space for the base application, before adding any models. Once you pick a location for your installation, you'll have to wait for the installer to download all the files.

Thanks to how Nomic handled development, the installer works on the most common operating systems. The quick start site offers distributions for Windows, Linux, and macOS. The space requirement is the only thing you'll need to pay attention to before installing.
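If you'd rather confirm the free space before you start, a few lines of Python can do it. This is only a rough sketch: the 1GB figure comes from the requirement mentioned above, while the function name and the install path are placeholders of my own, not anything GPT4All provides.

```python
import shutil

# Roughly 1GB for the base application, per the requirement described above.
BASE_APP_BYTES = 1 * 1024**3


def has_room_for(path, required_bytes):
    """Return True if the drive holding `path` has at least `required_bytes` free."""
    free = shutil.disk_usage(path).free
    return free >= required_bytes


if __name__ == "__main__":
    install_dir = "."  # hypothetical: replace with your chosen install location
    if has_room_for(install_dir, BASE_APP_BYTES):
        print("Enough free space for the base application.")
    else:
        print("Free up some space before installing.")
```

Models need their own space on top of this, so it's worth passing a larger `required_bytes` if you already know which model you plan to download.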

Running GPT4All

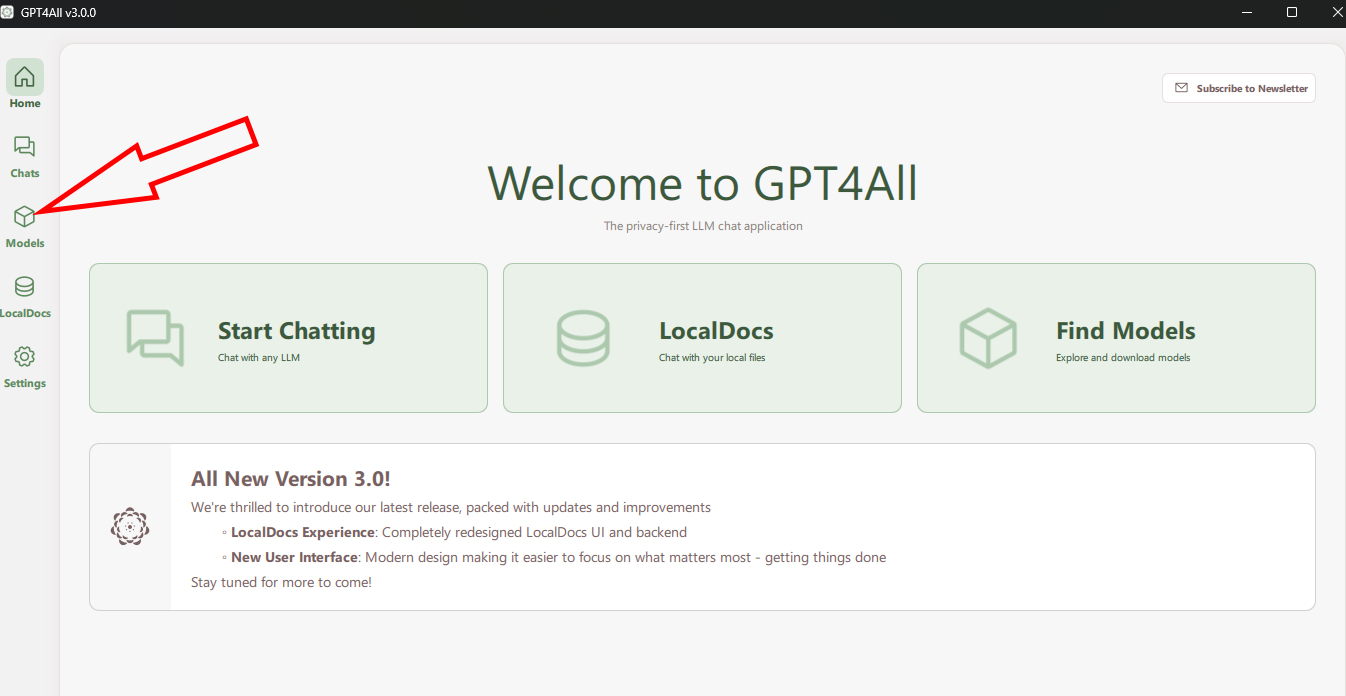

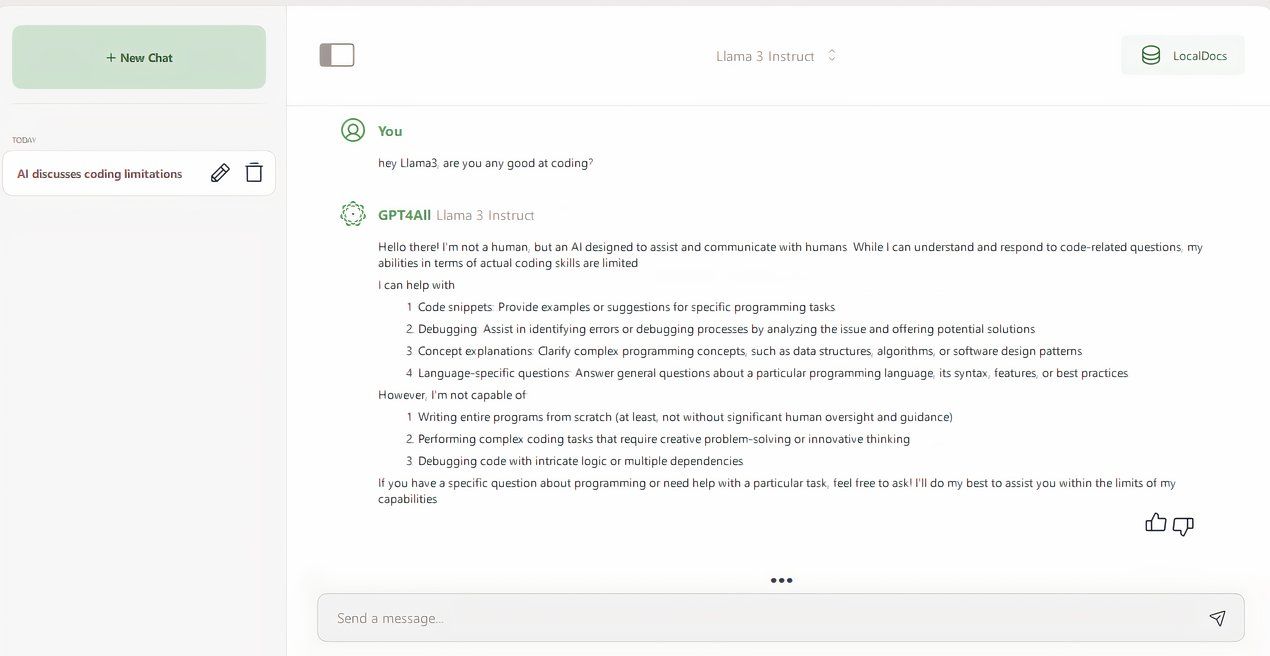

Once you've completed the installation, running GPT4All is as simple as searching for the app. Starting it up presents a few options, like feeding it local documents or chatting with the onboard model. Along the left side of the window, you can access previous chats and check the local documents you've used to update your local AI chatbot. However, the most important thing here is the models tab.

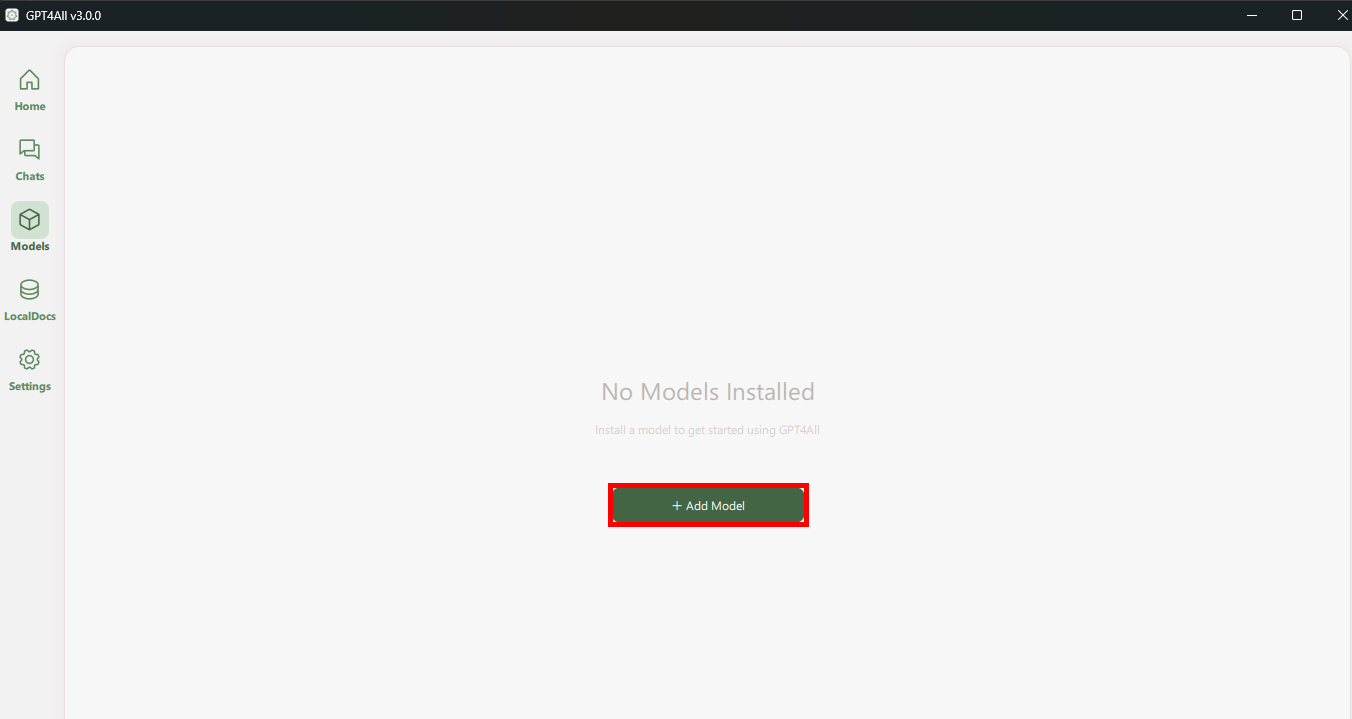

By default, you start with no models installed, but you'll quickly realize that you have access to a wide variety of models that you can download and use, completely for free. Let's look at how you update this empty chatbot into something truly spectacular.

Getting New Models For Your Local Chatbot

The models tab on the top left of the screen will open an area that allows you to scan through the available models. Each of the models will have a descriptor that tells you what the model offers and what it's suited for. It'll also give you an idea of the size (which ranges from 2GB all the way to a whopping 7GB of space) that you'll need for the model installation.

Since we're going for a completely offline model, I'd suggest using a Mistral (famous for HuggingChat) model. Why avoid using actual ChatGPT? Let's unpack the reasons.

First, GPT-4 requires an API access key, which you must pay for. The 3.5 model is available for free, but it's more limited in what it can process. GPT4All also lets you use OpenAI's models through API access, but this may involve sending your prompt data to OpenAI. With a local model, your documents are accessible only to you. OpenAI claims that none of the data it collects via API will be used to train its LLM, but the only guarantee you have is the company's word.

Despite ChatGPT being one of the most well-known chatbots, it isn't the only AI engine available to you. Because of the sheer versatility of the available models, you're not limited to using ChatGPT for your GPT-like local chatbot.

Models like Llama3 Instruct, Mistral, and Orca don't collect your data and will often give you high-quality responses. Depending on your preferences, these models might be better options than ChatGPT. The best thing to do is experiment and determine which models suit your needs. Remember, you'll still need the space to store each model, so don't keep several models on your machine if you only use one.
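To see which downloaded models are eating your storage, you could tally them with a short script. Treat this as a sketch under a couple of assumptions: that your models are stored as `.gguf` files in a single folder, and that you know where that folder is (the path below is a placeholder, since the download location is whatever you chose in the app).

```python
from pathlib import Path


def model_sizes_gb(model_dir, suffix=".gguf"):
    """Map each model file in `model_dir` to its size in GB, largest first."""
    sizes = {}
    for f in Path(model_dir).glob(f"*{suffix}"):
        if f.is_file():
            sizes[f.name] = f.stat().st_size / 1024**3
    # Sort by size so the biggest models appear at the top.
    return dict(sorted(sizes.items(), key=lambda kv: kv[1], reverse=True))


if __name__ == "__main__":
    models_folder = "."  # hypothetical: replace with your model download folder
    for name, size in model_sizes_gb(models_folder).items():
        print(f"{size:6.2f} GB  {name}")
```

Anything near the top of the list that you no longer use is a good candidate for removal from the models tab.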

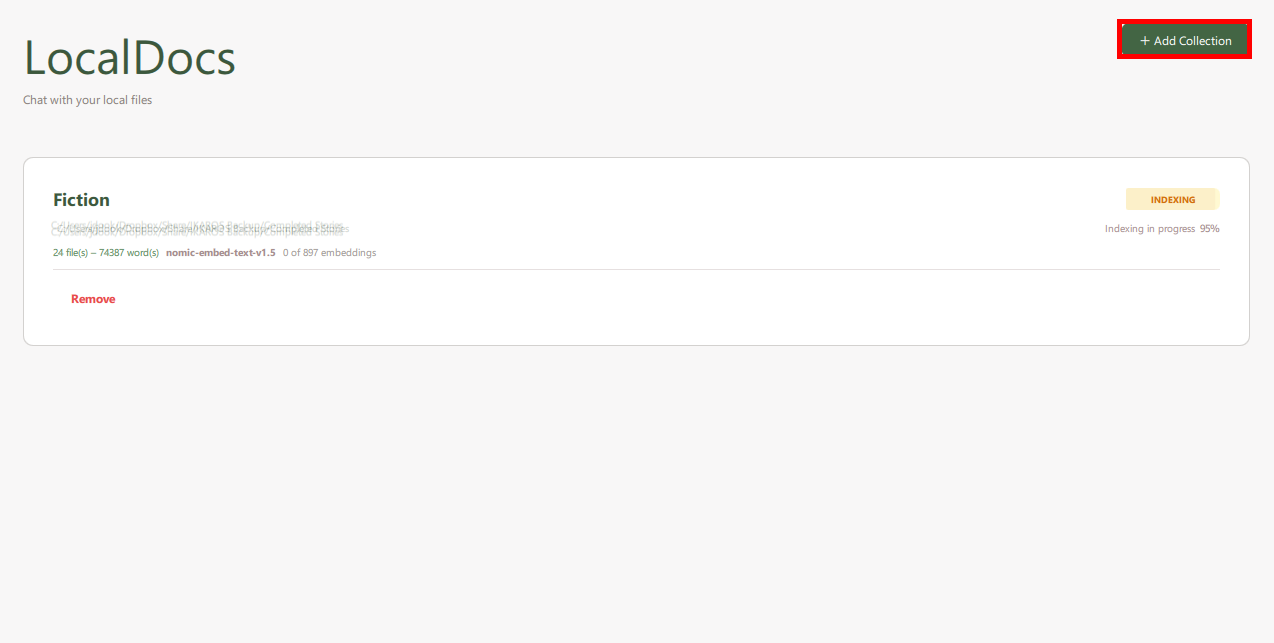

Set Up Access for Local Documents

If you're planning to use your local chatbot to help with document creation or to guide you on other things, you'll first need to set up a document collection. Luckily, GPT4All makes this simple. Just put all the documents you want the model to access into an easy-to-access folder, and point the engine to that folder using the "Add Collection" button. Processing time will vary with the number of documents in the folder, but it shouldn't take too long.

Once you've added your local document folder, your chatbot will be able to read the documents and advise you on anything you ask about them. This is pretty useful if you want to learn about a particular subject but don't have the time to dig through a stack of books. It's much more efficient to chat with the content and get what you want out of it.
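If your documents are scattered across subfolders, a small script can gather copies into one folder that you then point "Add Collection" at. This is a sketch, not part of GPT4All: the function name is my own, and the list of file extensions is an assumption, so adjust it to whatever file types your version of the app accepts.

```python
import shutil
from pathlib import Path

# Assumed set of document types; edit to match what your GPT4All version reads.
DOC_SUFFIXES = {".txt", ".md", ".pdf"}


def build_collection(source_dir, collection_dir, suffixes=DOC_SUFFIXES):
    """Copy matching documents from `source_dir` (recursively) into `collection_dir`."""
    source = Path(source_dir).resolve()
    target = Path(collection_dir).resolve()
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for f in sorted(source.rglob("*")):
        # Skip anything already inside the collection folder itself.
        if target in f.parents:
            continue
        if f.is_file() and f.suffix.lower() in suffixes:
            dest = target / f.name
            # Note: files with the same name overwrite each other.
            shutil.copy2(f, dest)
            copied.append(dest)
    return copied
```

Calling `build_collection("Documents/novel-notes", "Documents/gpt4all-collection")` (both paths hypothetical) copies the files and leaves the originals untouched.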

Tips for Using Your Local Chatbot Effectively

Chatbots are only as useful as you make them. I found that there were a few things that new users of an offline chatbot would need to know.

One of the most important things I haven't mentioned yet is that GPT4All needs a minimum of 8GB of RAM. While you can attempt to run the bot on less than that, it's definitely not recommended.
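Not sure how much memory your machine has? Here's a best-effort check in Python. It's a sketch with some assumptions: it relies on `os.sysconf`, which works on Linux and macOS but not on Windows (where the function returns `None`), and the 10% slack is my own choice, since systems usually report slightly less than the advertised amount.

```python
import os

MIN_RAM_BYTES = 8 * 1024**3  # the 8GB minimum mentioned above


def total_ram_bytes():
    """Best-effort total physical memory, or None if the platform can't report it."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None


def meets_minimum(ram_bytes, minimum=MIN_RAM_BYTES, tolerance=0.9):
    """True if `ram_bytes` reaches the minimum, allowing a little slack.

    The slack (an assumption of this sketch) accounts for memory the
    system reserves, so a nominal 8GB machine still passes.
    """
    return ram_bytes is not None and ram_bytes >= minimum * tolerance


if __name__ == "__main__":
    ram = total_ram_bytes()
    if ram is None:
        print("Couldn't detect RAM on this platform; check your system settings.")
    elif meets_minimum(ram):
        print(f"{ram / 1024**3:.1f} GB detected: meets the 8GB minimum.")
    else:
        print(f"{ram / 1024**3:.1f} GB detected: below the recommended 8GB.")
```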

You can build individual collections for different document categories. For example, if you have a set of documents you use for a casual project, and one you use professionally, the bot can develop two styles of response based on what you require. Each document collection can be processed individually to avoid overlap.

Finally, remember to keep your models updated.

Every now and again, you should check for updated models for more efficient chatbot use. Newer models may also come with updated use cases, so reading the release notes will help you adapt them to what you want them to do. Remember, if you're using a new model, you'll have to reprocess the documents using that model. There's no backward compatibility with older models.

Chatbots can be powerful ways to fast-track learning or help with creative enterprises like writing a novel. However, they're still only tools and are only as good as the data they work from and the models that do the processing. Having a local chatbot gives you the power to control the data the bot has access to and learns from. It also makes for more specific responses than you'd get from online models. As long as you keep them up to date, they'll serve as great assistants.


Jason Dookeran / How-To Geek

Lucas Gouveia / How-To Geek
