
ChatGPT Advanced Voice Is Great, But I Feel Cheated


OpenAI's latest offering promised a sci-fi-like experience, but the reality falls short of the hype. We were promised an AI assistant that could see the world and talk like a human. Instead, we got a blind chatbot that just says, “Sorry, I can’t do that,” in nine new emotive voices.

The Promise OpenAI Made With GPT-4o

When OpenAI unveiled GPT-4o in May 2024, it felt like we were on the cusp of the next big revolution in human-computer interaction. It was a sci-fi story come true: an AI assistant that could engage in genuinely human-like conversation, like the one in the movie Her.

Here’s a reminder of everything that was demoed:

- Real-time voice conversations.
- The ability to interrupt and redirect conversations naturally.
- A lifelike voice with an extensive emotional range.
- Dramatic storytelling and singing abilities.
- Enhanced multilingual capabilities and translation.
- Improved performance in non-English languages.
- Vision capabilities:
  - Solving math problems written on a piece of paper.
  - Reading facial expressions and understanding environments.
- Smarter than GPT-4 Turbo, the flagship OpenAI model before GPT-4o.

As a complete package, GPT-4o felt like it would bring a paradigm shift to how we use and interact with our computers. The demos were nothing short of mind-blowing, and my mind was racing with limitless possibilities.

For example, I could finally cancel my Calm subscription and use ChatGPT to tell me bedtime stories. I could use it as my personal trainer—place it on a desk to keep track of my reps and posture. It could sing my lyrics while I created tunes with Udio.

Needless to say, I was beyond thrilled. This was the future I was waiting for!

...But the Promise Is Yet to Be Delivered

Fast-forward a week past the big announcement, and I finally got my hands on GPT-4o. OpenAI said it would roll out everything it demoed gradually, so this GPT-4o was text-only: it accepted text and images as input and replied in text.

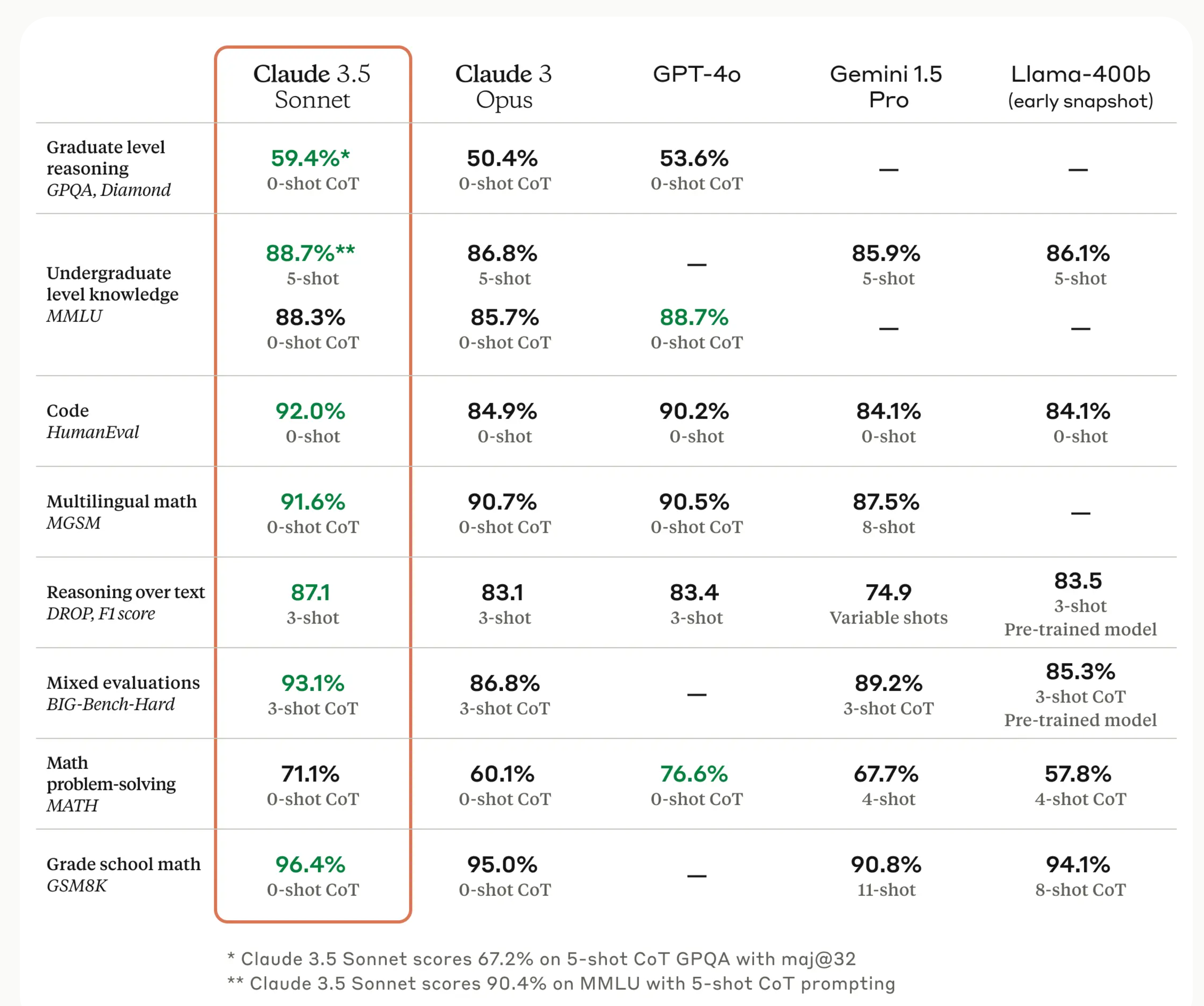

It was smarter than the previous model but wasn’t the sci-fi assistant we were promised. To add insult to injury, its “smarter” crown was quickly snatched a month later by Claude 3.5 Sonnet—which outperforms GPT-4o in both coding and creative tasks!

Finally, in July, Advanced Voice started rolling out in alpha to a select few users. But again, it was just the voice—no vision abilities in sight. Sure, it was better than the old Standard Voice mode, where you had to wait ages for it to respond, but it wasn't exactly groundbreaking.

September 2024 brought a wider rollout of the final version, and I finally got my hands on it. But can we really call it final when it still doesn't deliver on the original promise?

My Experience With ChatGPT Advanced Voice and Why I Feel Cheated

Let's be clear: the fully rolled-out Advanced Voice feature is impressive. It is undeniably the most human-like AI voice on the market, and conversations feel eerily natural, like I'm talking to another person.

Unfortunately, it is not that useful in practice. There’s still no vision capability, which drastically limits what you can do with it. And I get it: the feature is called Advanced Voice, so I shouldn’t have expected it to see things. But it can’t even see images you’ve uploaded to a chat. Even Standard Voice can do that!

Also, the brain behind the voice isn’t that advanced. It's still GPT-4o, which, let's face it, isn't the sharpest tool in the AI shed anymore. Advanced Voice also refuses to sing, and it seems OpenAI has no intention of adding singing as a feature despite showing it off in the demo. Here's how it responded when asked.

Can I generate musical content with voice conversations?

No. To respect creators' rights, we've put in place several mitigations, including new filters, to prevent voice conversations from responding with musical content, including singing.

While the limitation is likely due to potential legal issues, it’s ridiculous that this assistant of mine won’t even sing me “Happy Birthday!” OpenAI could’ve at least allowed it to sing stuff in the public domain. Looks like I’m stuck with Google Assistant for birthday wishes!

Now, coming to voice modulation—it’s good! Again, it’s better than anything else on the market, but not exceptional. I tried to get it to talk like Marvel's Venom, and it was hit or miss. Sometimes, it said "sure" and tried to sound like him—never got the voice right, though. Other times, it’d tell me that it can’t impersonate specific characters or celebrities.


Fair enough, I guess—so I tried asking for a gurgling voice with a lot of vocal fry to see if that worked, but it still didn’t sound right. I even tried to play around with different vocal parameters to see if I could nail the sound, but it was a failed experiment. While there is a good amount of flexibility, you can’t stretch it enough to get those unique vocals.

Also, you need to be very precise with your wording. It won’t accept requests to mimic or impersonate a known figure, but if you ask it to try to talk like someone, it does attempt to modulate its voice.

If all these limitations aren’t enough, you also have to deal with bugs. Sometimes Advanced Voice takes forever to load, forcing me to quit and reconnect. It's also not great for telling stories or delivering long monologues: after a minute or so, it just stops talking unless you repeatedly tell it to keep going. So much for my plans to use it as a bedtime storyteller!

There’s Still a Silver Lining

Thankfully, it isn’t all bad! You get tons of new voice options, each with its own personality and voice modulation range. Currently, my go-to voices are Maple, Arbor, and Vale. While I couldn’t make any of them sound like Venom, I did manage to get Arbor to sound like an anti-hero, which was pretty cool.

It's also great for thinking out loud! Having an always-available conversation partner, even if it's not the sharpest, is pretty nifty. It's like having Sherlock's skull to bounce ideas off of.

While Advanced Voice isn’t what GPT-4o was demoed to be, it’s still powerful and potentially useful in some scenarios. I can see how others might use it for basic storytelling for children, language learning, or quick translations. Those are legitimate use cases, and anyone seeking them won’t feel disappointed.

In the end, ChatGPT Advanced Voice is a step forward, but it's a much smaller step than what we were promised. It's a reminder that in the world of AI, we should always take grand promises with a grain of salt. Here's hoping that future updates will bring us closer to that sci-fi assistant we were all dreaming of.
