
Install and Use AI Chatbots at Home With Ollama

Key Takeaways

You can unlock the power of AI without a tech background. Using Ollama, anyone can run AI models on their own device and tailor them to their needs. It’s easy to use, keeps everything local, and requires no coding expertise.

Why Run a Local Bot?

Whether you're fully into the AI hype or think it's all a bunch of hot air, AI tools like ChatGPT and Claude are here to stay. Running a local AI chatbot offers some tangible benefits.

Data Privacy: Running a chatbot locally keeps your data on your own device, so your private or sensitive information isn’t sent to external servers or cloud services.

Offline Usage: Once a model is downloaded, a local AI chatbot works without an internet connection, which is handy if your connection is limited or unreliable.

Customization: You can fine-tune it to suit your specific needs or integrate it with special, proprietary datasets, so the chatbot fits your use case.

Cost Efficiency: Many cloud-based AI services charge for API usage or have subscription fees. Running a model locally has no per-use or subscription fees.

Reduced Latency: With a local AI model, there’s no need to make requests to an external server. This can cut the time it takes the chatbot to respond, making the experience smoother and more enjoyable.

Experimentation and Learning: Running a local chatbot gives you more freedom to experiment with settings, fine-tune the model, or try different versions of the AI. This is great for developers and hobbyists who want hands-on experience with AI technology.

Key Considerations When Using Large Language Models

Large language models (LLMs), big or small, can be resource-heavy. They often require powerful hardware like GPUs to do the heavy lifting, a lot of RAM to keep the model in memory, and significant storage for model files and datasets.

Parameters are values the model adjusts during training. More parameters generally lead to better language understanding, but larger models require more resources and time. For simpler tasks, models with fewer parameters, like 2B (billion) or 8B, may be sufficient and faster to run.
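To make the link between parameter count and hardware more concrete, here is a rough back-of-the-envelope sketch. It assumes the memory needed to hold a model's weights is about the number of parameters times the bits stored per parameter, divided by 8 to get bytes. The helper name `estimate_weights_gb` and the bit widths are illustrative assumptions, not part of Ollama, and real memory use will be higher once runtime overhead is included.

```shell
# Rough estimate of the memory (in GB) needed just to hold model weights.
# Assumption: size = parameters x bits per parameter / 8; overhead is ignored.
# estimate_weights_gb is a hypothetical helper, not an Ollama command.
estimate_weights_gb() {
    params_billion=$1   # e.g. 8 for an 8B model
    bits_per_param=$2   # e.g. 16 for half precision, 4 for 4-bit quantization
    awk -v p="$params_billion" -v b="$bits_per_param" \
        'BEGIN { printf "%.1f\n", p * b / 8 }'
}

estimate_weights_gb 8 16   # 8B model at 16 bits per parameter
estimate_weights_gb 8 4    # same model stored at 4 bits per parameter
estimate_weights_gb 2 4    # 2B model stored at 4 bits per parameter
```

Under these assumptions, an 8B model needs several times the memory of a 2B model at the same precision, which is why smaller models are a sensible first choice on modest hardware.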

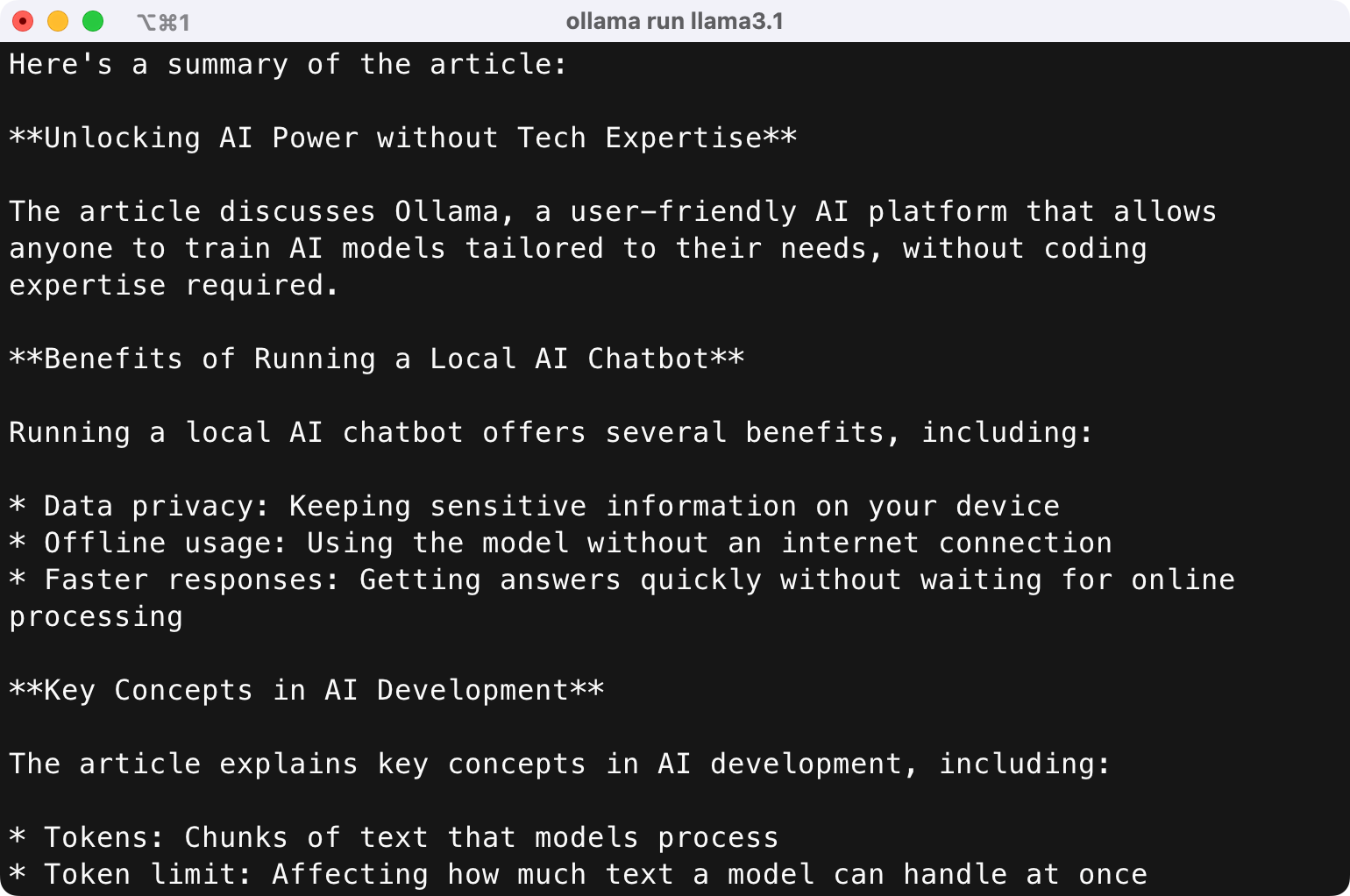

Tokens are chunks of text that the model processes. A model’s token limit affects how much text it can handle at once, so larger capacities allow for better comprehension of complex inputs.
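If you want a feel for how much text fits in a token limit, a very rough estimate can help. The sketch below assumes about 4 characters per token for English text, which is only a rule of thumb; each model uses its own tokenizer and the real count will differ. The function name `estimate_tokens` is hypothetical.

```shell
# Very rough token estimate for English text.
# Assumption: about 4 characters per token; real tokenizers vary by model.
# wc -c counts bytes, which matches characters for plain ASCII text.
estimate_tokens() {
    chars=$(printf '%s' "$1" | wc -c)
    echo $(( (chars + 3) / 4 ))   # divide by 4, rounding up
}

estimate_tokens "Why is the sky blue?"
```

Treat the result as a ballpark figure for deciding whether a long article is likely to fit, not as an exact count.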

Lastly, dataset size matters. Models train faster on smaller, specific datasets, like those used for customer service bots, while larger and more complex datasets take longer. Fine-tuning pre-trained models with specialized data is often more efficient than starting from scratch.

Getting Ollama Up and Running

Ollama is a user-friendly AI platform that enables you to run AI models locally on your computer. Here’s how to install it and get started:

Install Ollama

You can install Ollama on Linux, macOS, and Windows (currently in preview).

For macOS and Windows, download the installer from the Ollama website and follow the installation steps like any other application.

On Linux, open the terminal and run:

curl -fsSL https://ollama.com/install.sh | sh

Once installed, you’re ready to start experimenting with AI chatbots at home.
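To confirm the install worked, you can check that the `ollama` command is on your PATH. This is a minimal sketch for Linux or macOS shells; the function name `check_ollama` is made up for illustration.

```shell
# Check whether the ollama command is available on the PATH.
# check_ollama is a hypothetical helper name.
check_ollama() {
    if command -v ollama >/dev/null 2>&1; then
        echo "ollama found: $(ollama --version)"
    else
        echo "ollama not found on PATH"
    fi
}

check_ollama
```

If the command is not found, opening a new terminal window or re-running the installer is a reasonable first thing to try.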

Running Your First Ollama AI Model

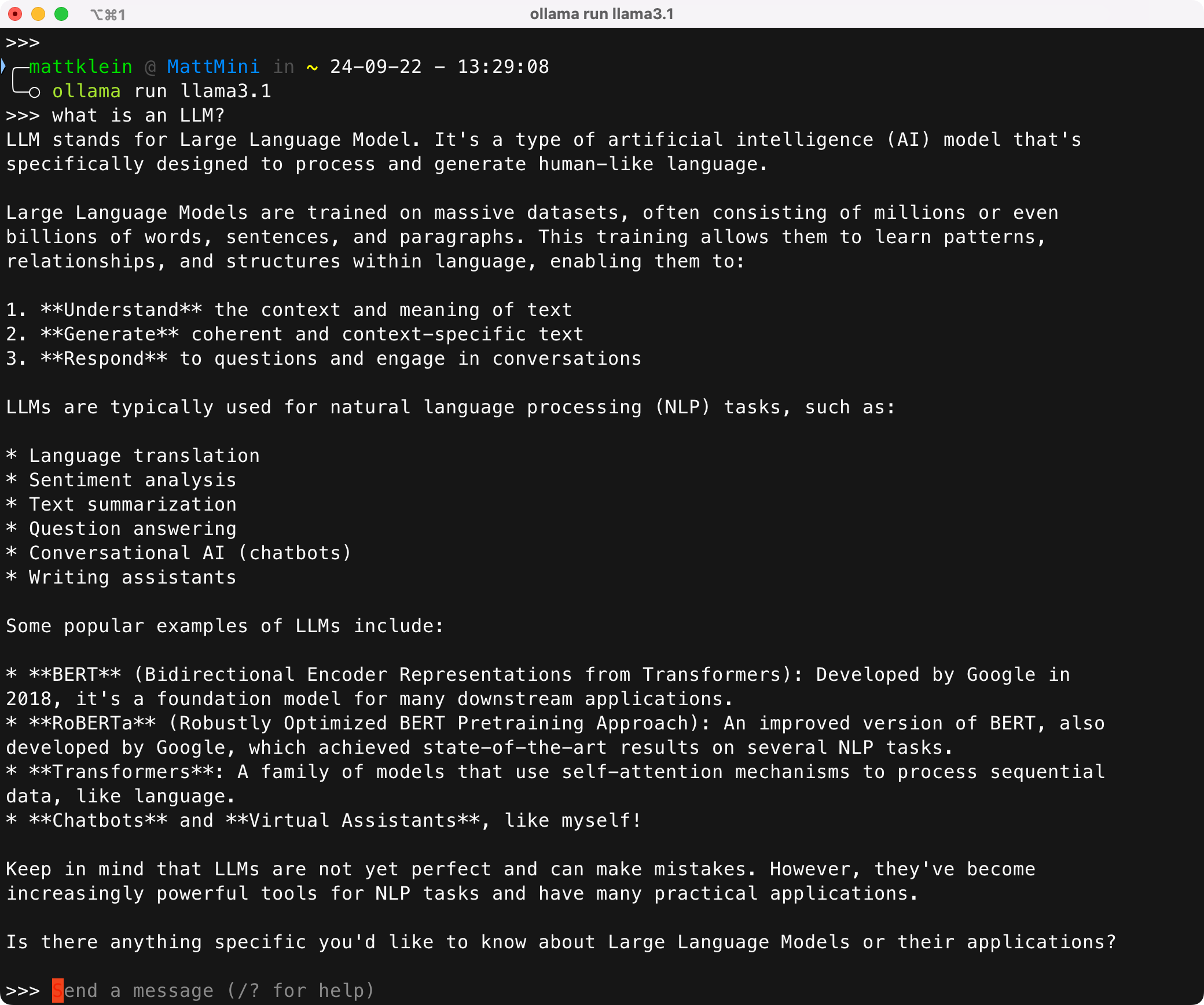

Once you install Ollama, open the terminal on Linux or macOS, or PowerShell on Windows. To start, we'll run a popular LLM developed by Meta called Llama 3.1:

ollama run llama3.1

Since this is the first time you're using Ollama, it will fetch the Llama 3.1 model, install it automatically, then give you a prompt so you can start asking it questions.
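You don't have to use the interactive prompt. `ollama run` also accepts a question as an extra argument and prints the answer. The sketch below wraps that in a small function; the name `ask` and the default model are assumptions chosen for illustration.

```shell
# Ask a single question without entering the interactive prompt.
# ask is a hypothetical wrapper; the model defaults to llama3.1.
ask() {
    question=$1
    model=${2:-llama3.1}
    if ! command -v ollama >/dev/null 2>&1; then
        echo "ollama is not installed" >&2
        return 1
    fi
    ollama run "$model" "$question"
}

# Example (downloads the model on first use):
# ask "Why is the sky blue?"
```

A wrapper like this is convenient when you want to use the chatbot from scripts rather than typing into the prompt.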

Running Other Models

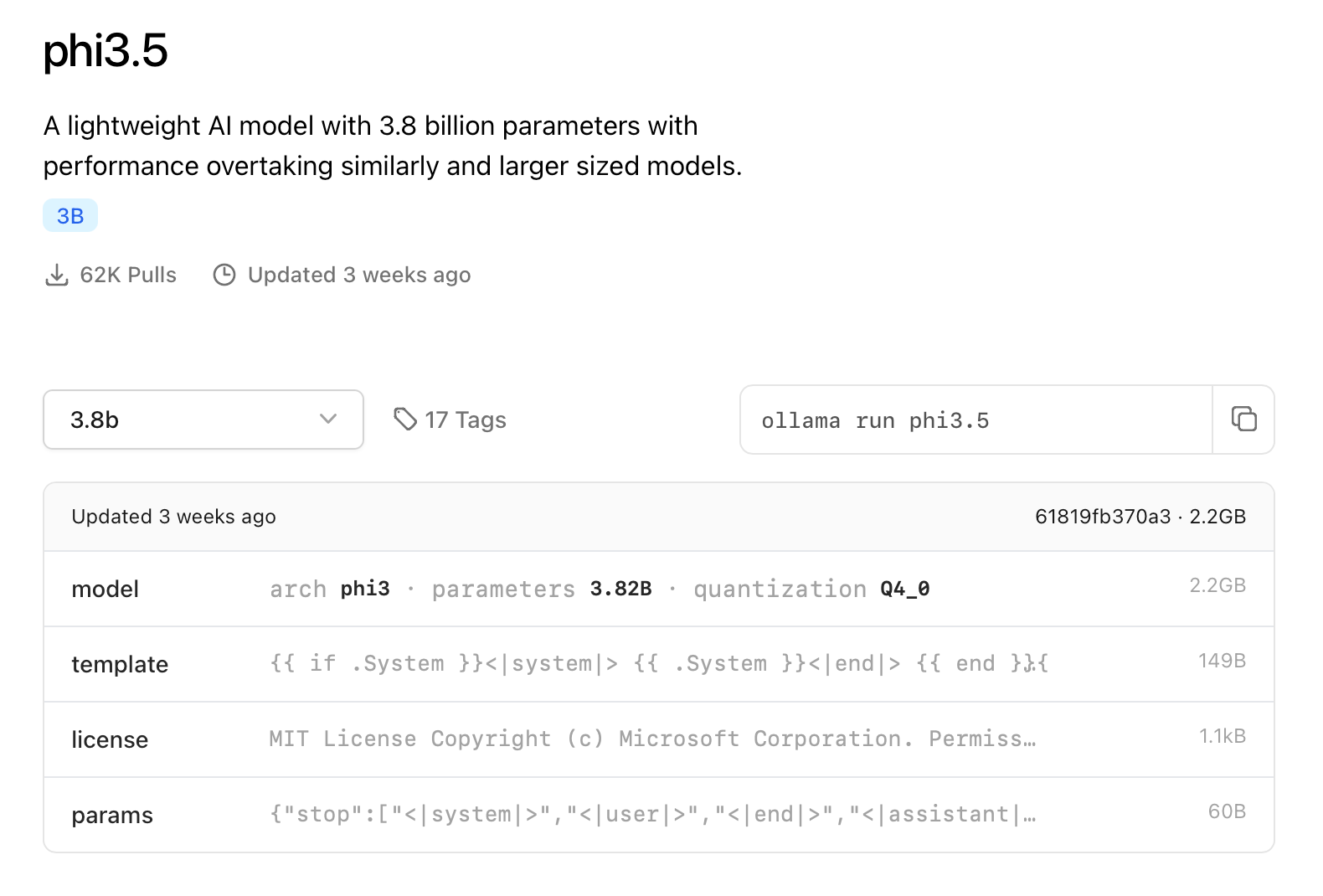

While Llama 3.1 is often the go-to model for people just starting out with Ollama, you may want to explore other models, such as lighter ones that better suit your system’s performance.

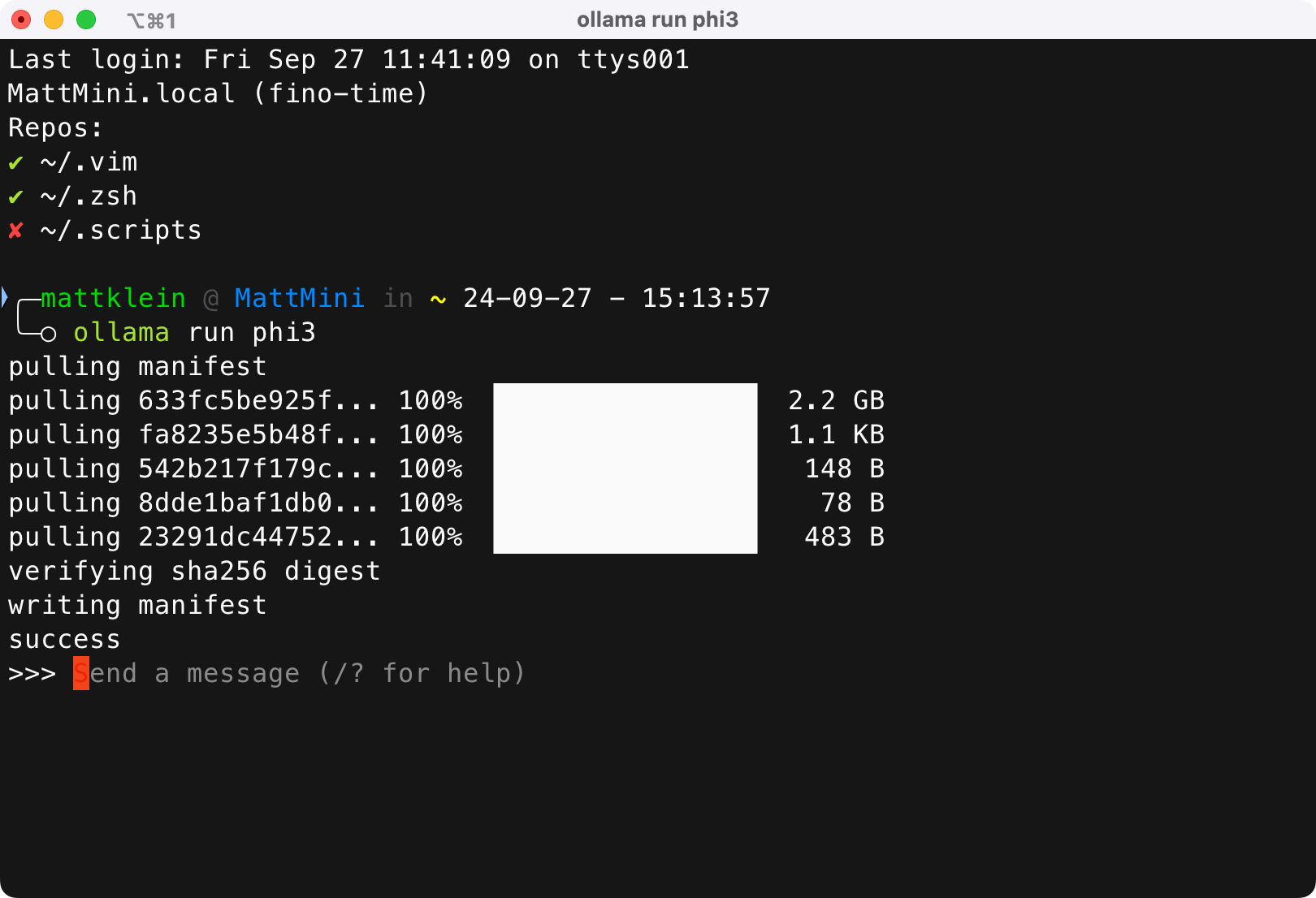

When you find a model that suits your hardware and your needs, run the same command you used for Llama 3.1 with the new model's name. For example, to download and run Phi 3:

ollama run phi3

Again, if this is your first time using the model, Ollama will automatically fetch, install, and run it.
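If you would rather download models ahead of time without starting a chat, `ollama pull` fetches a model and exits. Here is a small sketch that pulls several models in one go; `pull_models` is a hypothetical helper name.

```shell
# Download models ahead of time without opening a chat session.
# pull_models is a hypothetical helper around the `ollama pull` command.
pull_models() {
    for model in "$@"; do
        echo "Pulling $model..."
        ollama pull "$model" || return 1   # stop at the first failed download
    done
}

# Example:
# pull_models phi3 llama3.1
```

This can be handy before going offline, since the models will already be on disk when you run them later.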

Other Commands You'll Want to Know

Ollama has quite a few other commands you can use, but here are a few we think you might want to know.

Models take up significant disk space. To clear up space, remove unused models with:

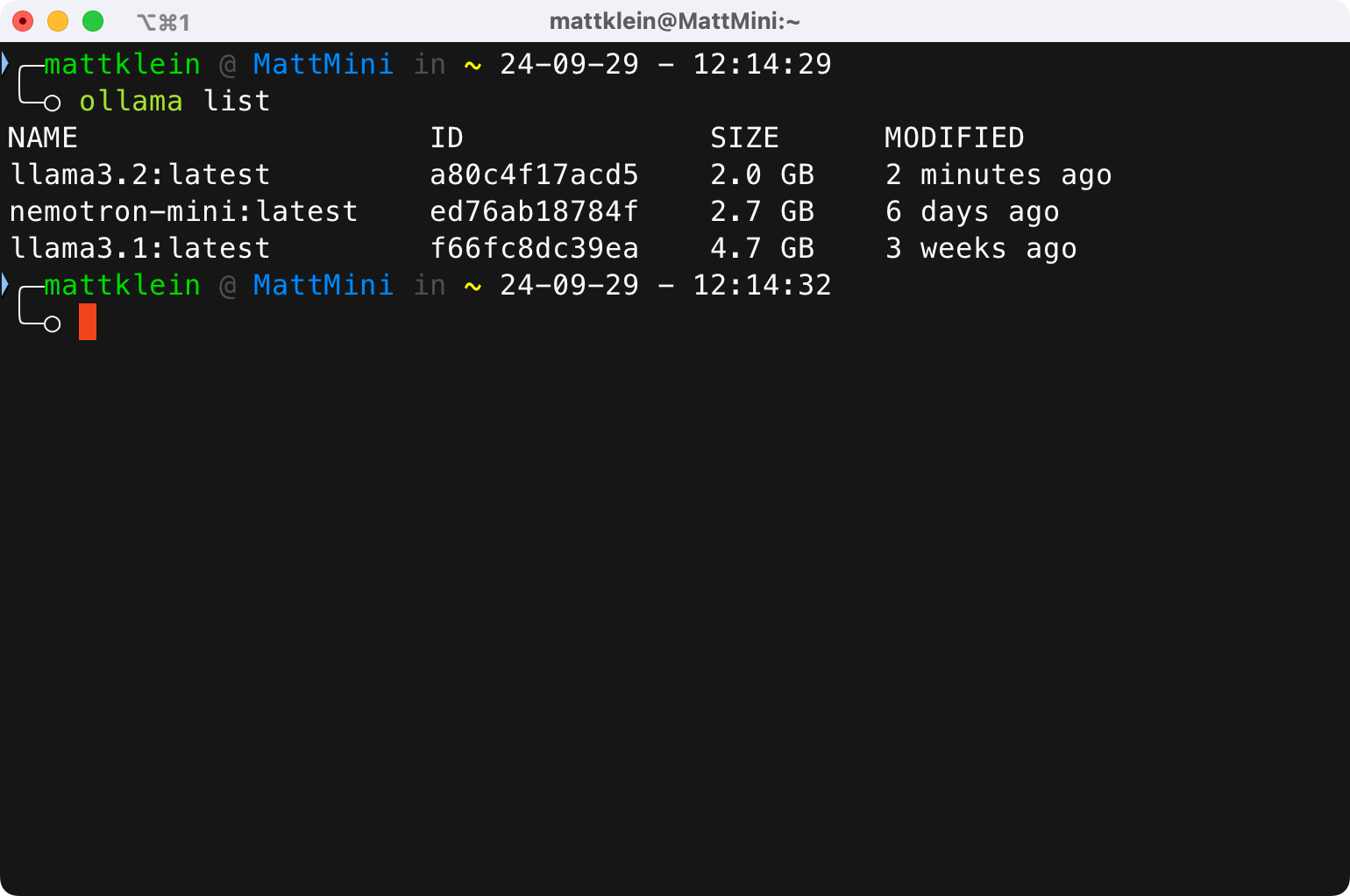

ollama rm modelname

To view models you’ve already downloaded, run:

ollama list

To see which models are actively running and consuming resources, use:

ollama ps

If you want to stop a model to free up resources, use:

ollama stop modelname

If you want to see the rest of Ollama's commands, run:

ollama --help

Things You Can Try

If you've held off on trying AI chatbots because of concerns about security or privacy, then now's your time to jump in. Here are a few ideas to try to get started!

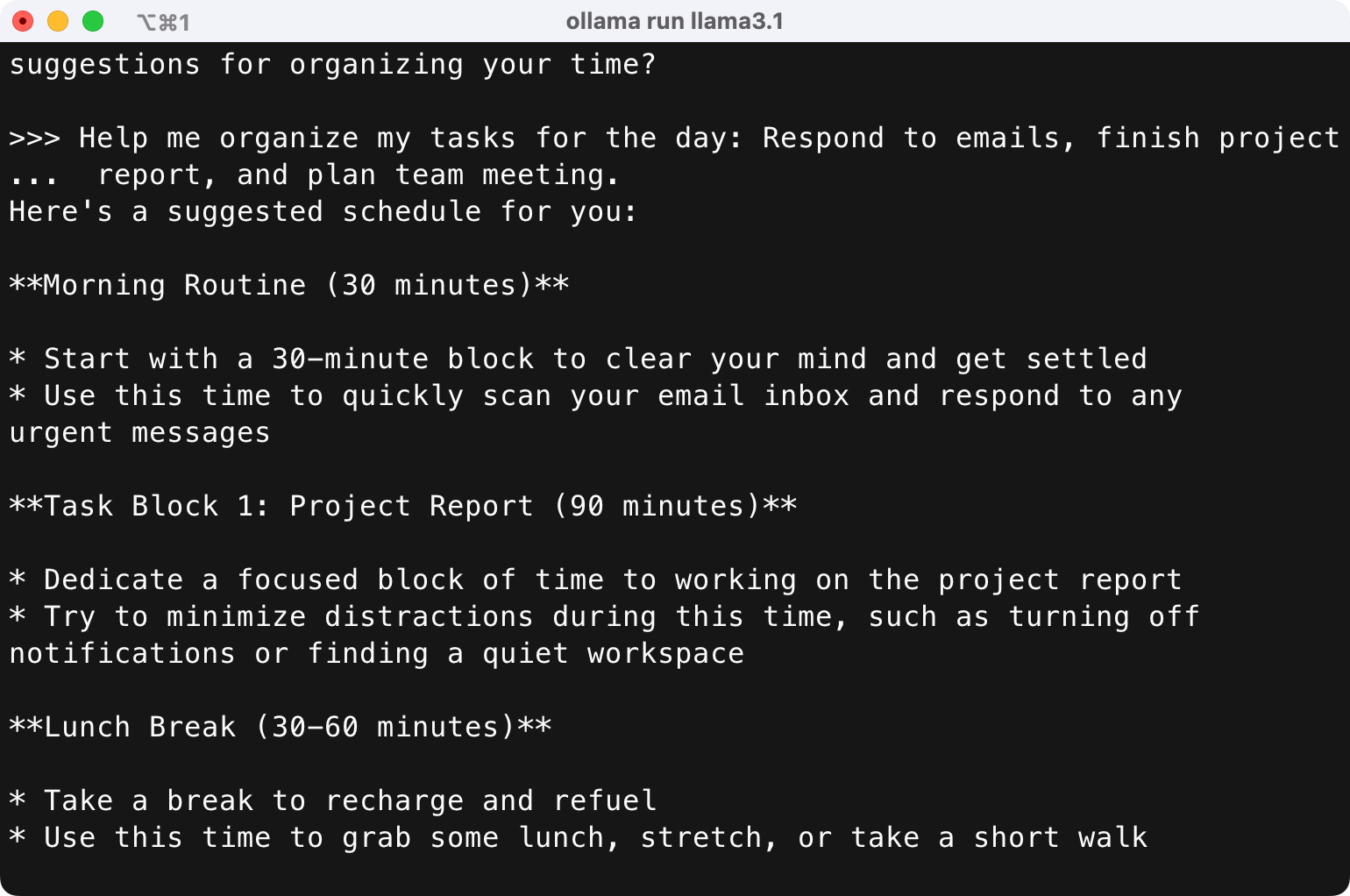

Create a to-do list: Ask Ollama to generate a to-do list for the day.

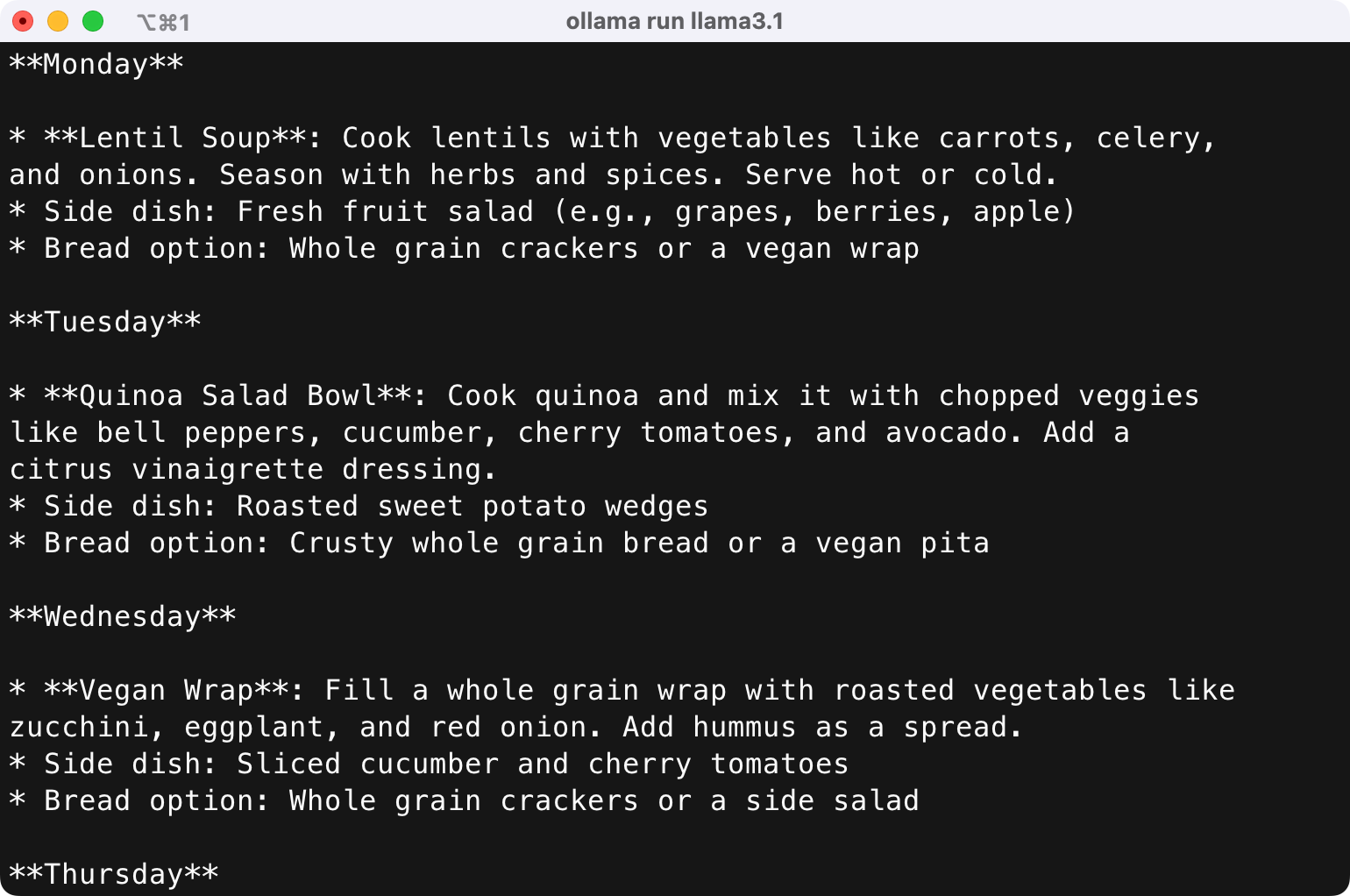

Plan lunch for the week: Need help planning meals for the week? Ask Ollama.

Summarize an article: Short on time? Paste an article into Ollama and ask for a summary.
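For the summary idea, you can hand a saved article straight to the model from the terminal instead of pasting it. This is a minimal sketch that assumes the article is stored as a plain text file; `summarize_file`, the prompt wording, and the file name `article.txt` are all placeholders.

```shell
# Summarize a text file with a local model.
# summarize_file is a hypothetical helper; the model defaults to llama3.1.
summarize_file() {
    file=$1
    model=${2:-llama3.1}
    if [ ! -f "$file" ]; then
        echo "File not found: $file" >&2
        return 1
    fi
    # Pass the instruction and the file contents as one prompt.
    ollama run "$model" "Summarize this article in three bullet points: $(cat "$file")"
}

# Example:
# summarize_file article.txt
```

Very long articles may exceed the model's token limit, so shorter inputs are more likely to give a complete summary.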

Feel free to experiment and see how Ollama can assist you with problem-solving, creativity, or everyday tasks.

Congratulations on setting up your very own AI chatbot at home! You’ve taken the first steps into the exciting world of AI, creating a powerful tool that’s tailored to your specific needs. By running the model locally, you’ve ensured greater privacy, faster responses, and the freedom to fine-tune the AI for custom tasks.
